Originally Posted by jon c.

The Appeal to Authority.

One of the classic rhetorical fallacies.

Ignoring the evidence presented by an Authority on the subject of human interaction with autonomous controls is another.

Professor Mary (Missy) Cummings received her B.S. in Mathematics from the US Naval Academy in 1988, her M.S. in Space Systems Engineering from the Naval Postgraduate School in 1994, and her Ph.D. in Systems Engineering from the University of Virginia in 2004. A naval officer and military pilot from 1988 to 1999, she was one of the U.S. Navy’s first female fighter pilots. She is a Professor in the George Mason University Mechanical Engineering, Electrical and Computer Engineering, and Computer Science departments. She is an American Institute of Aeronautics and Astronautics (AIAA) Fellow, and recently served as the senior safety advisor to the National Highway Traffic Safety Administration. Her research interests include embedded artificial intelligence in safety-critical systems, assured autonomy, human-systems engineering, and the ethical and social impact of technology.

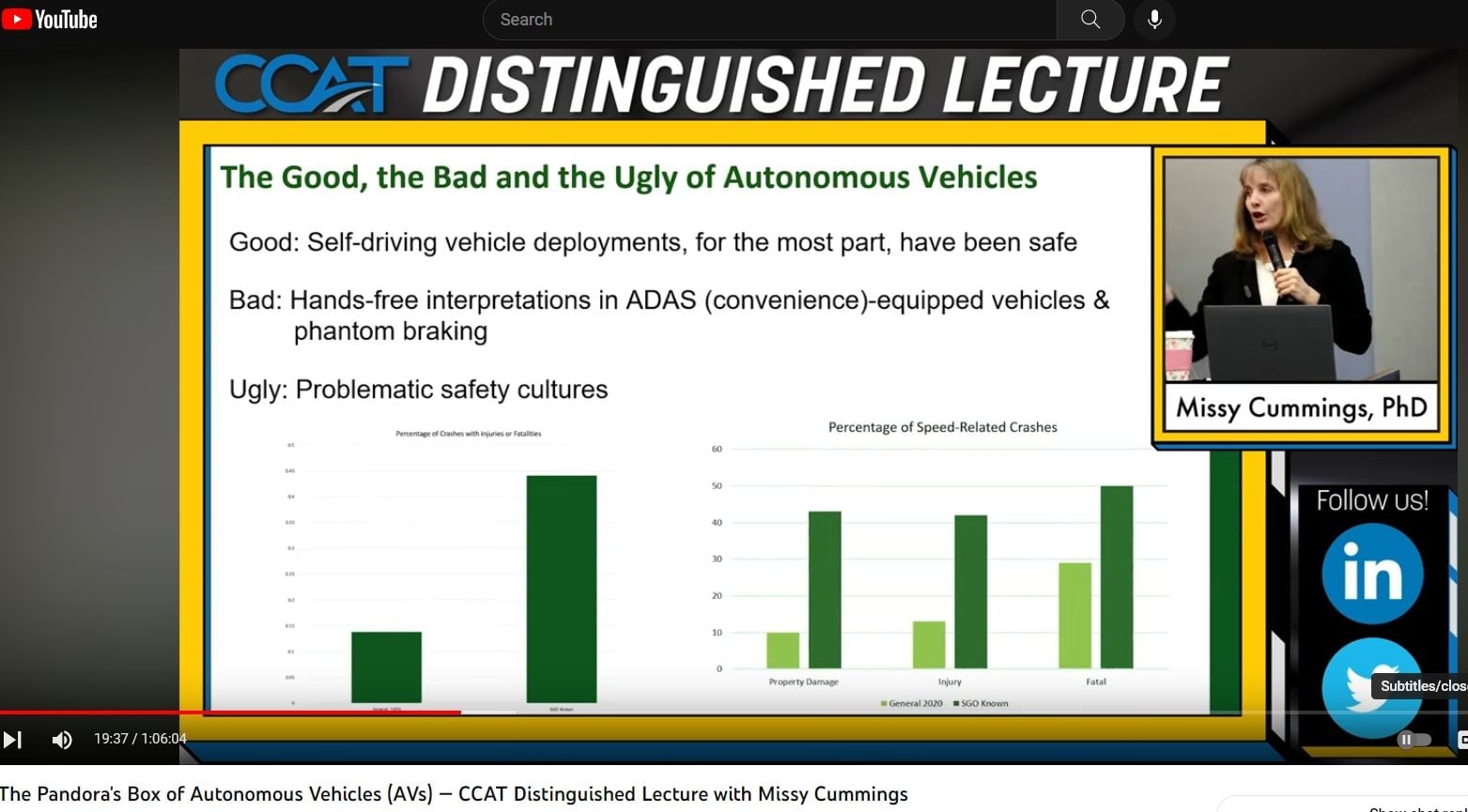

Dr. Missy Cummings, Professor at George Mason University and former Senior Safety Advisor to the National Highway Traffic Safety Administration (NHTSA), joined the CCAT Distinguished Lecture Series to discuss her lessons learned in that role and the use of systems engineering principles. The recent presentation at the Center for Connected and Automated Transportation (CCAT), University of Michigan Transportation Research Institute, may be informative for those interested in why a Tesla running the latest "Beta" self-driving software didn't stop for a pedestrian in a crosswalk. More importantly, it addresses the issues created when drivers depend on such software to drive the car safely without their input or constant attention. It also addresses the issue of manufacturers that show little regard for safety standards or regulations. It will probably mean nothing to people who prefer to shut out information that doesn't fit their own perception of reality.

A few slides from the presentation are extracted:

The presentation is summarized in an article at Autoblog:

Autonomy expert Missy Cummings explains why even driver assists can be trouble

Extract:

Yet the very nature of these systems makes constant vigilance hard, as Dr. Missy Cummings will tell you. Fresh off a New York Times profile, the George Mason University engineering professor, former NHTSA senior adviser for safety, and longtime autonomy and robotics researcher delivered a lecture last week at the University of Michigan’s Center for Connected and Automated Transportation. We’ve attached a video, above, and as college lectures go, it’ll hold your attention.

First, let’s just interject here that when Cummings was appointed to her role at NHTSA, Elon Musk called her “extremely biased against Tesla,” which had the effect of siccing his fans on her — she received death threats from Tesla-stans, and her family had to move out of their home for a time. Now, for a credible understanding of how human beings interact with high-performance technology, who are you going to believe, an auto company CEO, or an Annapolis grad who was one of the Navy’s first female fighter pilots? Only one of these human beings can land an F/A-18 on an aircraft carrier.

When she went to NHTSA, Cummings said, she had “been complaining about NHTSA for years” and relished the chance to attempt fixing it. Musk is no fan of the agency either, so you'd think the enemy of Elon’s enemy is his friend. And the two of them presumably share a common goal: safer cars. Musk might even appreciate her sense of humor. When at Duke University, she named its Humans and Autonomy Lab — HAL.

Yet she had to have a security assessment done on the venue where she gave the U of M lecture. “Trust me, everyone hates me today,” she told the audience. “All the manufacturers, NHTSA, my 15-year-old daughter, everyone hates me.” (Her daughter has her learner’s permit; imagine being taught to drive by one of the foremost authorities on vehicle safety, your mom.)

But, Cummings says, “I’m good with that. I don’t mind being the bad cop because I do think we’re at the most important time in history since we figured out brake lights and headlights. I think that we are in the scariest time in transportation with autonomy and technology.

“I think we’re making some big mistakes.”

Her fat-chance goal is for the perpetually put-upon and glacially paced NHTSA to impose some order on the Wild West of these technologies. Musk put an embryonic FSD on the streets because he could. There was nothing to stop Tesla from beta-testing the system among an unwitting public.

In an analysis Cummings sent NHTSA last fall of driver-assist systems from GM, Ford, Tesla, et al., she determined that cars using these systems that were involved in fatal crashes were traveling over the speed limit in 50% of the cases, while those involved in crashes with serious injuries were speeding in 42% of cases. In crashes that did not involve ADAS, those figures were 29% and 13%. So one simple solution would be for NHTSA to mandate speed limiters on these systems. “The technology is being abused by humans,” she told the Times. “We need to put in regulations that deal with this.”

The technology by definition lulls you. Cummings described an experiment at Duke in which she and other researchers placed 40 test subjects behind the wheel of a driving simulator for a four-hour “trip” using adaptive cruise. At the 2½-hour mark, a moose ambled slowly across the road. Only one test subject had presence enough to avoid the moose — the other 39 clobbered it.

Autopilot, Super Cruise, Blue Cruise ... she's grown to dislike active driver assists that are, or can be tricked into being, hands-free. "They put it on, whatever version of 'autopilot' they have, and then they relax. They relax, because indeed, that's what they've been told." They might be paying attention "for the most part," she said. For the most part is not enough.

As for full autonomy, Cummings laid out a description of the learning curve that humans and now technology have to climb, from first acquiring a basic skill to ultimately full expertise, when we've mastered the skill-based reasoning that helps us know when to break a rule to get out of an unusual situation safely. Handling uncertainty is the hump that technology, which is inherently rules-based, can't seem to get over.

She showed how an autonomous vehicle was stopped in its tracks during testing because it interpreted a movers’ truck as not just a truck, but as a collection of a truck, four poles, traffic signs, a fence, a building, a bus, and “a gigantic person who was about to attack.”

That’s an eight-year-old example, she admits, but “still very much a problem,” as illustrated by the now-infamous phantom-braking crash in the Bay Bridge tunnel in San Francisco last Thanksgiving, in which the driver of a Tesla blamed "Full Self-Driving" for mysteriously changing lanes and then slamming on the brakes, resulting in an eight-car pileup that injured a 2-year-old child.

“And for all you Tesla fanboys who are jumping on Twitter right now so you can attack me, I’m here to tell you that it’s not just a Tesla problem,” she said. “All manufacturers who are dealing in autonomy are dealing with this problem” — her next examples involving GM Cruise cars in San Francisco, including one that apparently attempted to drive through an active firefighting scene. "San Francisco, oh boy, they're at their wits' end with Cruise."

But despite the problems, she also offers praise. "Even though I just complained a lot about Cruise, I am in awe of Cruise and of Waymo and of all the other car companies out there that have not had any major crashes. They have not killed anybody since the Uber issue. So I am very amazed ... I think the self-driving community has done a very good job of policing themselves."

Those are just some highlights. It's a fascinating lecture from someone who seems to be sincerely working to keep you and me safe. And it’s well worth an hour of your time.

Meanwhile, take a cue from AAA and Missy Cummings: Don’t trust, don't let your guard down, don't relax behind the wheel — that’s never been more true than it is now.

The NYT article that discusses the subject and Dr. Cummings's experience, cited in the Autoblog article above, is at:

Carmakers Are Pushing Autonomous Tech. This Engineer Wants Limits.

Relevant to any discussion of so-called autonomous vehicles is the reaction and rhetoric of some people to any discussion that throws shade on Tesla's cavalier approach to testing "beta software" on public streets.

Extract:

Dr. Cummings has long warned that this can be a problem — in academic papers, in interviews and on social media. She was named senior adviser for safety at NHTSA in October 2021, not long after the agency began collecting crash data involving cars using driver-assistance systems.

Mr. Musk responded to her appointment in a post on Twitter, accusing her of being “extremely biased against Tesla,” without citing any evidence. This set off an avalanche of similar statements from his supporters on social media and in emails to Dr. Cummings.

She said she eventually had to shut down her Twitter account and temporarily leave her home because of the harassment and death threats she was receiving at the time. One threat was serious enough to be investigated by the police in Durham, N.C., where she lived.

Many of the claims were nonsensical and false. Some of Mr. Musk’s supporters noticed that she was serving as a board member of Veoneer, a Swedish company that sells sensors to Tesla and other automakers, but confused the company with Velodyne, a U.S. company whose laser sensor technology — called lidar — is seen as a competitor to the sensors that Tesla uses for Autopilot.

“We know you own lidar companies and if you accept the NHTSA adviser position, we will kill you and your family,” one email sent to her said.

Jennifer Homendy, who leads the National Transportation Safety Board, the agency that investigates serious automobile crashes, and who has also been attacked by fans of Mr. Musk, told CNN Business in 2021 that the false claims about Dr. Cummings were a “calculated attempt to distract from the real safety issues.”